ml5.js + q5 WebGPU + p5play

ml5.js is a beginner-friendly machine learning library, now compatible with q5.js!

If you’ve tried ml5.js in the past and thought it was a bit too crusty, give version 1 a shot: the new API is excellent. It was developed by Daniel Shiffman and other talented folks at NYU ITP.

Student interest in AI and ML is peaking yet again with the release of DeepSeek, and ml5 is a great way to get students using this stuff in their own q5.js projects.

Check out my “q5 + ml5” collection!

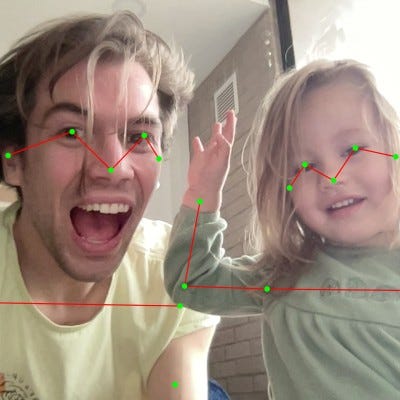

Things get more interesting when we add p5play into the mix! Here’s a demo I adapted from Raj Raizada’s, which adds p5play to one of Daniel Shiffman’s faceMesh demos.

p5play + ml5: https://openprocessing.org/sketch/2537705

Recently I added `createCapture` to q5-dom, which most of the ml5 demos require. Video playback in WebGPU was not too difficult to add to the q5-webgpu-image module, once I wrapped my head around external textures.
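For anyone wondering how `createCapture` and ml5 fit together, here is a minimal sketch modeled on ml5's own p5-style faceMesh example. It is not the code from the demo above. The canvas size, option values, and dot styling are illustrative, and I'm assuming the q5 and ml5 scripts are already loaded on the page.

```javascript
// Minimal faceMesh sketch (illustrative; assumes q5 and ml5 v1 are loaded).
let faceMesh;
let video;
let faces = [];

function preload() {
  // Load the model before setup runs; option values are assumptions.
  faceMesh = ml5.faceMesh({ maxFaces: 1, refineLandmarks: false, flipHorizontal: false });
}

function setup() {
  createCanvas(640, 480);
  // createCapture gives ml5 a live webcam feed to analyze.
  video = createCapture(VIDEO);
  video.size(640, 480);
  video.hide(); // hide the DOM element, the frame is drawn on the canvas instead
  // Run detection continuously; gotFaces receives each new result.
  faceMesh.detectStart(video, gotFaces);
}

function draw() {
  image(video, 0, 0, width, height);
  fill(0, 255, 0);
  noStroke();
  // Draw a small dot on every detected face keypoint.
  for (const face of faces) {
    for (const keypoint of face.keypoints) {
      circle(keypoint.x, keypoint.y, 5);
    }
  }
}

function gotFaces(results) {
  // Keep the latest detections for the next draw call.
  faces = results;
}
```

The sketch never reads pixels itself: ml5 pulls frames from the capture element, and `draw` just paints the latest frame and keypoints.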

In other news, q5’s WebGPU renderer now replicates a key feature of the Canvas2D renderer: drawing persists between frames unless cleared or covered with `background`. That finally let me add alpha blending to the background of my rainbow loops demo for a shining/trailing effect. `saveCanvas` now works in q5 WebGPU too.
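The trailing effect comes from painting a mostly transparent `background` over the previous frame instead of clearing it. Here is a rough sketch of the idea, not the rainbow loops code. It is written against p5-style defaults (0-255 color values, top-left origin), so the color values and coordinates may need adjusting for q5's WebGPU mode. The variable names and numbers are made up for illustration.

```javascript
// Trailing effect sketch (illustrative values, p5-style defaults assumed).
let angle = 0;

function setup() {
  createCanvas(400, 400);
  background(0); // start from an opaque black canvas
}

function draw() {
  // A low-alpha background only partly covers the previous frame,
  // so older drawings fade out gradually instead of vanishing.
  background(0, 20);

  // Move a circle along a circular path around the canvas center.
  angle += 0.05;
  const x = width / 2 + Math.cos(angle) * 120;
  const y = height / 2 + Math.sin(angle) * 120;

  noStroke();
  fill(255, 200, 0);
  circle(x, y, 30);
}
```

A lower alpha value in `background` leaves longer trails, and a higher one makes them fade faster.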

q5.js version 3 is coming soon!